The human visual system makes scene interpretation seem easy. We can look out of a window and can make sense of even a very complex scene. This process is very difficult for a machine. As with natural language interpretation, it is a problem of ambiguity. The orientation and position of an object changes its appearance, as does different lighting or colour. In addition, objects are often partially hidden by other objects.

In order to interpret an image, we need both low-level information, such as texture and shading and high-level information, such as context and world knowledge. The former allows us to identify the object, the latter to interpret it according to our expectations.

Because of these multiple levels of conformation, most computer vision is based on a hierarchy of processes, starting with the raw image and working toward a high-level model of the world.

Each stage builds on the features extracted at the stage below:

1. Digitisation:

The aim of computer vision is to understand some scene in the outside world. This may be captured using a video camera, but may come from a scanner (for example, optical character recognition). It will be easier to digitise photographs than to work with real-time video. Also, it is not necessary that images come from visible light. For example, satellite data may use infrared sensing.

For the purposes of exposition, we will assume that we are capturing a visible image with a video camera. This image will need to be digitised so that it can be processed by a computer and also “cleaned up” by signal processing software.

Digitising Images:

For use in computer vision, the image must be represented in a form which the machine can read. The analog video image is converted (by a video digitiser) into a digital image. The digital image is basically a stream of numbers, each corresponding to a small region of the image, a pixel.

ADVERTISEMENTS:

The number is a measure of the light intensity of the pixel, and is called a grey level. The range of possible grey levels is called a grey scale (hence grey-scale image). If the grey scale consists of just two levels (black or white) the image is a binary image.

Figure 14.2, shows an image (a) and its digitised form (b). There are ten grey levels from 0-white to 9-black. More typically there will be 16 or 256 grey levels rather than ten and often 0 is black (no light). However, the digits 0-9 fit better into the picture.

Most of the algorithms used in computer vision work on simple grey-scale images. However, sometimes colour images are used. In this case, there are usually three or four values stored for each pixel, corresponding to either primary colours (red, blue and green) or some other colour representation system.

ADVERTISEMENTS:

The blurring of edges, and other effects conspire to make the very-scale image inaccurate. Some cameras may not generate parallel lines of pixels, the pixels may be rectangular rather than square (the aspect ratio) or the relationship between darkness and grey scale recorded may not be linear. However, the most persistent problem is noise: inaccurate readings of individual pixels due to electronic fluctuations, dust on the lens or even a foggy day!

Thresholding:

Given a grey-scale image, the simplest thing we can do is to threshold it; that is, select all pixels whose greyness exceed some value. This may select key significant features from the image.

Thresholding can be used to recognise objects. For example, faults in electrical plugs can be detected using multiple threshold levels. At some levels the wires are selected, allowing us to check that the wiring is correct, at others the presence of the fuse can be verified.

ADVERTISEMENTS:

We can also use thresholding to obtain a simple edge detection. We can simply follow round the edge of a thresholded image. And this be done without actually performing the thresholding as we can simply follow pixels where the grey changes from the desired value. This is called contour following. Contour following would give a good start for image understanding. The more robust approaches will instead use the rate of change in intensity- slope rather than height-to detect edges.

2. Signal Processing:

i. Digital Filters:

We have noted some of the problems of noise, blurring and lighting effects in processing a signal. Various signal processing techniques which make image interpretation difficult can be applied to the image in order to remove some of the effects of noise or enhance other features, such as edges. The application of such techniques is also called digital filtering.

This is by analogy with physical filters, which enable us to remove unwanted materials, or to find desired material. Thresholding is a simple form of digital filter, whereas thresholding processes each pixel independently, more sophisticated filters also use neighbouring pixels. Some filters go beyond this and potentially each pixel’s filtered value is dependent on the whole image. However, all the filters we will consider operate on a finite window-a fixed-size group of pixels surrounding the current pixel.

ADVERTISEMENTS:

ii. Linear Filters:

Many filters are linear. These work by having a series of weights for each pixel in the window. For any point in the image, the surrounding pixels are multiplied by the relevant weights and added together to give the final filtered pixel value.

In Fig. 14.3., we see the effect of applying a filter with a 3 x 3 window. The filter weights are shown at the top right. The initial image grey levels are at the top left. For a particular pixel the nine pixel values in the window are extracted. These are then multiplied by the corresponding weights, giving in this case the new value 1. This value is placed in the appropriate position in the new filtered image (bottom left).

The pixels around the edge of the filtered image have been left blank. This is because we cannot position a window of pixels 3×3 centred on the edge pixels. So, either the filtered image must be smaller than the initial image, or some special action is taken at the edges.

Moreover some of the filtered pixels have negative values associated with them. Obviously this can only arise if some of the weights are negative. This is not a problem for subsequent computer processing, but the values after this particular filter cannot easily be interpreted as grey levels.

A related problem is that the values in the final image may be bigger than the original range of values. For example, with the above weights, a zero pixel surrounded by nines would give rise to a filtered value of 36. Again, this is not too great a problem, but if the result is too large or too small (negative) then it may be too large to store-an overflow problem. Usually, the weights will be scaled to avoid this.

So, in the example above, the result of applying the filter would be divided by 8 in order to bring the output values within a similar range to the input grey scales. The coefficients are often chosen to add up to a power of 2, as dividing can then be achieved using bit shifts, which are far faster.

iii. Smoothing:

The simplest type of filter is for smoothing an image. That is, surrounding pixels are averaged to give the new value of a pixel. Fig. 14.4., shows a simple 2×2 smoothing filter applied to an image. The filter window is drawn in the middle, and its pivot cell (the one which overlays the pixel to which the window is applied) is at the top left.

The filter values are all ones, and so it simply adds the pixel and its three neighbours to the left and below and averages the four (÷ 4). The image clearly consists of two regions, one to the left with high (7 or 8) grey-scale values and one to the right with low (0 or 1) values.

However, the image also has some noise in it. Two of the pixels on the left have low values and one on the right a high value. Applying the filter has removed these anomalies, leaving the two regions far more uniform, and hence suitable for thresholding or other further analysis.

Because only a few pixels are averaged with the 2×2 filter, it is still susceptible to noise. Applying the filter would only reduce the magnitude by a factor of 4. Larger windows are used if there is more noise, or if later analysis requires a cleaner image. A larger filter will often have an uneven distribution of weights, giving more importance to pixels near the chosen one and less to those far away.

There are disadvantages to smoothing, especially when using large filters the boundary between the two regions becomes blurred (Fig. 14.4.). There is a line of pixels which are at an average value between the high and low regions. Thus, the edge can become harder to trace.

Furthermore, fine features such as thin lines may disappear altogether. There is no easy answer to this problem-the desire to remove noise is in conflict with the desire to retain sharp images. So how do we distinguish a small but significant feature from noise?

iv. Gaussian Filters:

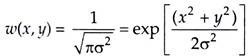

The Gaussian filter is a special smoothing filter based on the bell-shaped Gaussian curve, well known in statistics as the ‘normal’ distribution. We imagine a window of infinite size, where the weight, w(x, y), assigned to the pixel at position x, y from the centre is

The constant a is a measure of the spread of the window-how much the image will be smeared by the filter. A small value of o will mean that the weights in the filter will be small for distant pixels, whereas a large value allows more distant pixels to affect the new value of the current pixel. If noise affects groups of pixels together then we would choose a large value of σ.

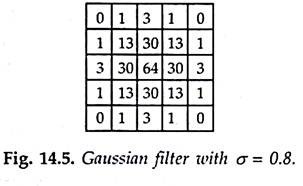

Although the window for a Gaussian filter is theoretically infinite, the weights become small rapidly and so, depending on the value of σ, we can ignore those outside a certain area and so make a finite windowed version. For example, Fig. 14.5., shows a Gaussian filter with a 5 x 5 window; it is symmetric and so the weights decrease towards the edge. This filter has weights totaling 256, but this took some effort! The theoretical weights are not integers, and the rounding errors mean that in general the sum of weights will not be a nice number.

One big advantage of Gaussian filters is that the parameter a can be set to any value yielding finer or coarser smoothing. Simple smoothing methods tend only to have versions getting ‘bigger’ at fixed intervals (3 x 3, 5 x 5 etc.). The Gaussian with σ = 0.7 would also fit on a 5 x 5 window, but would be weighted more towards the centre (less smoothing).

Practical Considerations:

The problems of overflow when computing filtered images, and in general there are various computational factors which influence the choice of filter. Indeed, the cost of image processing is so high that it is often better to use a simple method rather than an optimal one.

Images are large. A 512 x 512 image with 256 grey levels consumes 256 kilobytes of memory. This is expensive in terms of storage, but also those 262144 pixels take a long time to process one by one. A linear filter with a 2 x 2 window takes four multiplications per pixel, a 3 x 3 window takes nine and 5 x 5 takes 25 ! Also, a simple filter with coefficients of ± 1 or powers of 2 can be calculated by simple adds and shifts, further reducing the cost. So, the simple 2 x 2 smoothing filter in Fig. 14.4, although crude, only takes 1 million additions, whereas the Gaussian filter in Fig. 14.5., takes over 6 million multiplications.

One solution is to use special hardware, DSP (Digital Signal Processing) chips or parallel processing. Indeed, our brain works in something like this fashion, with large areas committed to specific tasks such as line detection. It processes the whole image at once, rather than sequentially point by point. Whether or not this is available, depends or the choice of processing method.

The large amounts of storage required make it imperative that algorithms do not generate lots of intermediate images. One way to achieve this is to overwrite the original image as it is filtered. With some very simple filters this is not a problem, but in general we must be careful to avoid overwriting pixels which will be needed. An alternative way to avoid intermediate storage is to work out the effects of multiple steps and to compute them in one step.

3. Edge Detection:

Edge detection is central to most computer vision. There is also substantial evidence that edges form a key part of human visual understanding. An obvious example is the ease with which people can recognise sketches and cartoons. A few lines are able to invoke the full two or three dimensional image. Edge detection consists of two sub-processes. First of all, potential edge pixels are identified by looking at their grey level compared with surrounding pixels.

Then these individual edge pixels are traced to form the edge lines. Some of the edges may form closed curves, while others will terminate or form a junction with another edge. Some of the pixels detected by the first stage may not be able to join up with others to form true edges. These may correspond to features too small to recognise properly, or simply be the result of noise.

Identifying Edge Pixels:

The grey-level image is an array of numbers (grey levels) representing the intensity value of the pixels. It can be viewed as a description of a hilly landscape where the numbers are altitudes. So a high number represents a peak and a low number a valley. Edge detection involves identifying ridges, valleys and cliffs.

These are the edges in the image. We can use gradient operators to perform edge detection by identifying areas with high gradients. A high gradient (that is, a sudden change in intensity) indicates an edge. There are a number of different gradient operators in use.

Gradient Operators:

If we subtract a pixel’s grey level from the one immediately to its right, we get a simple measure of the horizontal gradient of the image.

This two-point filter is shown in Fig. 14.6., (i) together with two alternatives also shown in Fig. are: a four point filter, (ii) which uses a 2 x 2 window, and a six-point filter, (iii) which uses a 3 x 3 window. The vertical version of the six-point filter is also shown (iv).

The effects of the six-point filters are shown in Fig. 14.7.

The image shows the corner of a rectangular region in the bottom right-hand corner. It may be noted how the horizontal gradient operator picks out the left edge of the region and the vertical operator picks out the upper edge. Both operators would detect a diagonal edge, but less efficiently than one in their preferred direction. So, in Fig. 14.8., the pixel values are large, but the filtered values at the edge are smaller and more smeared.

These operators can be useful if edges at a particular orientation are important, in which case we can simply threshold the filtered image and treat pixels with large gradients as edges. However, neither operator on its own detects both horizontal and vertical edges.

Robert’s Operator:

Robert’s operator uses a 2 x 2 window. For each position (x, y), a gradient function, G(x, y), is calculated by

G(x, y) = | f(x, y) – f(x +1, y -1) | + | f(x + 1, y) – f(x, y-1)|

where, f(x, y) is the intensity of the pixel at that position. This is not a simple linear filter, as it involves calculating the absolute value of the difference between diagonally opposite pixels. This is necessary in order to detect lines is all directions.

The results of the gradient function can be compared with a predetermined threshold to detect a local edge.

Consider some examples in Fig. 14.9. (i-iv):

A threshold of 5 would detect (ii) and (iii) as edges; but not (i) or (iv). Let’s look at each example. The first is a constant grey level: there are no edges and none are detected whatever threshold is chosen. The second is a very clear edge running up the image, and it gets the highest gradient of the four examples.

The third example also has quite a strong gradient. It appears to represent an edge running diagonally across the image. The fourth one has dramatic changes in intensity, but a low gradient. This is because there is little overall slope in the image. It represents a sort of ridge going across the picture. This might be a line, a single pixel wide, but not an edge between regions.

Robert’s operator has the advantage of simplicity, but suffers from being very localised and therefore easily affected by noise. For example, (ii) has a high gradient reading and would have been detected as a potential edge, but this is largely based on the bottom right pixel. If this one pixel were wrong, perhaps as a result of random noise, a spurious edge would be detected.

Sobel’s Operator:

Sobel uses a slightly larger 3×3 window, which makes it somewhat less affected by noise. Fig. 14.10., labels the grey levels of the nine pixels.

The gradient function is calculated as:

G =│(c + 2f + i)-(a + 2d + g)│+ │(g + 2h + i)-(a + 2b + c)│

Again, this can be thresholded to give potential edge points.

We may note that the grey level at the pixel itself, e is not used- the surrounding pixels give all the information.

We can see the operator as composed of two terms, a horizontal and a vertical gradient:

H = (c + 2f + i) – (a + 2d + g)

V = (g + 2h + i) – (a + 2b + c)

G = | H| + │V│

The first term, H, compares the three pixels to the right of e with those to the left. The second, V compares those below the pixel with those above. In fact, if we look back at the six-point gradient filters in Fig. 14.6., we will see that V and H are precisely the absolute values of the outputs of those filters.

An edge running across the image will have a large value of V, one running up the image a large value of H. So, once we have decided that a pixel represents an edge point, we can give the edge an orientation using the ratio between H and V. Although we could follow edges simply by looking for adjacent edge pixels, it is better to use edge directions.

It is also possible to give an orientation with Robert’s operator, as the two terms in it correspond to a northwesterly and northeasterly gradient respectively. However, this estimate of direction would be even more subject to noise.

Further, that Sobel’s operator uses each pixel value twice, either multiplying it by two (the side pixels: f, d, h and b) or including it in both terms (the corner pixels: a, c, g and i). However, an error in one of the corner pixels might cancel out, whereas one in the side pixels would always affect the result.

For this reason, a modified version of Sobel’s operator may be used:

G = | (c + f + i) – (a + d + g) | + │ (g + h + i)-(a + b + c)│

On the other hand, there are theoretical reasons for preferring the original operator, so the choice of operator in a particular application is rather a matter of taste!

Laplacian Operator:

An alternative to measuring the gradient is to use Laplacian operator. This is a mathematical measure (written ߜ) of the change in gradient.

Its mathematical definition is in terms of the second differential in the x and y direction (where the first differential is the gradient):

However, for digital image processing, linear filters are used which approximate to the true Laplacian. Approximations are shown in Fig. 14.11, for a 2 x 2 grid and a 3×3 grid.

To see how they work, we will use a one-dimensional equivalent to the Laplacian which filters a one-dimensional series of grey levels using the weights (1, -2, 1). The effect of this is shown in Fig. 14.12. We may see how the edge between the nines and ones is converted into little peaks and troughs. The actual edge detection then involves looking for zero crossings places where the Laplacian’s values change between positive and negative.

It is obvious that in Fig. 14.12, the boundary between the nines and the ones is a 5. The one-dimensional image is slightly blurred. When Robert’s or Sobel’s operators encounter such an edge they are likely to register several possible edge pixel 1 on either side of the actual edge. The Laplacian will register a single pixel in the middle of the blurred edge.

The Laplacian also has the advantage that it is a linear filter and can thus be easily manipulated with other filters. A frequent combination is to use a Gaussian filter to smoothen the image, and then follow this with a Laplacian. Because both are linear filters, they can be combined into a single filter called the Laplacian-of- Gaussian (LOG) filter.

The Laplacian however, does not give any indication of orientation. If this is required then some additional method must be used once an edge has been detected.

Successive Refinement and Marr’s Primal Sketch:

The images are very large and hence calculations over the whole image take a long time. One way to avoid this is to operate initially on coarse versions of the image and then successively use more detailed images to examine potentially interesting features.

For example, we could divide a 512 x 512 image into 8 x 8 cells and then calculate the average grey level over the cell. Treating each cell as a big ‘pixel’, we get a much smaller 64 x 64 image. Edge detection is then applied to this image using one of the methods suggested above.

If one of the cells is registered as an edge then the pixels comprising it are investigated individually. Assuming that only a small proportion of the cells are potential edges then the savings in computation are enormous—the only time we have to visit all the pixels is when the cell averages are computed. This method of successive refinement can be applied to other parts of the image processing process, such as edge following and region detection.

One representation of images, Marr’s primal sketch, uses similar methods to detect features at different levels of detail, but for a very different reason. Instead of averaging over cells, Laplacian-of-Gaussian filters are used with different standard deviations, where small standard deviations correspond to fine detail.

The primal sketch is divided into edges, terminations (ends of edges), bars (regions between parallel edges) and blobs (small isolated regions). In particular, blobs are regions of pixel which register as edges (zero crossings of the Laplacian) at fine resolution, but disappear at high resolution.

We have now identified pixels which may be present on the edges of objects. So far we have ignored them! The next step is to string those pixels together to make lines, that is, to identify which groups of pixels make up particular edges. The basic rule of thumb is that if two suspected edges are connected then they form a single line.

However, this needs to be modified slightly for three reasons:

1. Because of noise, shadows, etc., some edges will contain gaps.

2. Noise may cause spurious pixels to be candidate edges.

3. Edges may end at junctions with other lines.

The first means that we may have to look for more than one pixel ahead to find the next edge point. The other two mean that we have to use the edge orientation information in order to reject spurious edges or detect junctions.

A basic edge-following algorithm is then as follows:

1. Choose any suspected edge pixel which has not already been used.

2. Choose one direction to follow first.

3. Look for an adjoining pixel in the right general direction.

4. If the orientation of the pixel is not too different then accept it.

5. If there is no adjoining pixel scan those one or two pixels away.

6. If an acceptable pixel has been found repeat from 3.

7. If no acceptable pixel is found repeat the process for the other direction.

The pixels found during a pass of this algorithm are regarded as forming a single edge. The whole process is repeated until all edge pixels have been considered.

The output of this algorithm is a collection of edges, each of which consists of a set of pixels. The end points of each edge segment will also have been detected at step 7. If the end point is isolated then it is a termination; if several lie together, or if it lies on another edge, then the end point is at a junction. This resulting set of edges and junctions will be used by Waltz’s algorithm to infer three- dimensional properties of the image.

However, before passing these data on to more knowledge-rich parts of the process, some additional cleaning up is possible. For example, very short edges may be discarded as they are likely either to be noisy or to be unimportant in the final image (for example, texture effects). Also, we can look for edges which terminate close to one another.

If they are collinear and there are no intervening edges then we may join them up to form a longer edge. Also, if two edges with different orientation terminate close together, or an edge terminates near the middle of another edge, then this can be regarded as a junction.

One problem with too much guessing at lower levels is that it may confuse higher levels (the source of optical illusions). One solution is to annotate edges and junctions with certainty figures. Higher levels of processing can then use Bayesian-style inferencing and accept or reject these guesses depending on higher-level semantic information.

4. Region Detection:

In contrast, an oil painting will not have lines drawn at the edges, but will consist of areas of different colours. An alternative to edge detection is to concentrate on the regions composing the image.

Region Growing:

A region can be regarded as a connected group of pixels whose intensity is almost the same. Region detection (or segmentation) aims to identify the main shapes in an image. This can be done by identifying clusters of similar intensities.

The main process is as follows:

1. Group identical pixels into regions, and

2. Examine the boundaries between these regions—if the difference is lower than a threshold, merge the regions.

The result is the main regions of the image.

This process is demonstrated in Fig. 14.13. The first image (i) shows the original grey levels. Identical pixels are merged giving the initial regions in (ii). The boundaries between these are examined and in (iii) those where the intensity is less than 3 are marked for merging. The remainder, those where the difference in intensity is more than 2, are retained, giving the final regions in (iv).

The Problem of Texture:

Texture can cause problems with all types of image analysis, but region growing has some special problems. If the image is unprocessed then a textured surface will have pixels of many different intensities. This may lead to many small island regions within each large region. Alternatively, the texture may ‘feather’ the edges of the regions so that different regions get merged.

The obvious response is to smooth the image so that textures become greys. However, if the feathering is bad, then sufficient smoothing to remove the texture will also blur the edge sufficiently that the regions will be merged anyway.

In a controlled environment, where lighting levels can be adjusted, we may be able to adjust the various parameters (level of smoothing, threshold for merging) so that recognition is possible, but where such control is not easily possible region merging may fail completely.

Representing Regions-Quad-Trees:

In a computer program it is not that straight forward! The simplest representation would be to keep a list of all the pixels in each region. However, this would take an enormous amount of storage.

There are various alternatives to reduce this overhead. One popular representation is quad-trees. These make use of the fact that images often have large areas with the same value-precisely the case with regions. We will describe the algorithm in terms of storing a binary image and then show how it can be used for recording regions.

Start off with a square image where the width in pixels is some power of 2. Examine the image. Is it all black or white? If so, stop, If not, then divide the image into four quarters and look at each quarter.

If any quarter is all black or white, then leave it alone, but if any quarter is mixed, then split it into quarters. This continues until either each region is of one colour, or else one gets to individual pixels-which must be one colour by definition. This process is illustrated in Fig. 14.14.

The first part (i) shows the original image, perhaps part of a black circle. This is then divided and subdivided into quarters in (ii). Finally, in (iii) we see how this can be stored in the computer as a tree data structure. We may note how the 64 pixels of the image are stored in five tree nodes. Of course the tree nodes are more complicated than simple bitmaps and so for this size of image a quad-tree is a little useful, but for larger images the saving can be enormous.

Quad tree representation can be used to record regions in two ways. Each region can be stored as a quad-tree where a black means that the pixel is part of the region. Alternatively, we can use a multi-coloured version of a quad-tree where each region is coded as a different colour. In either case, regions can easily be merged using the quad-tree representation.

Computational Problems:

Region growing is very computationally expensive, involving many passes over the digitised image. Operating on reduced representations such as quad-trees can reduce the number of operations, but at the expense of more complicated data structures. For this reason, Vernon (1991) suggests that region growing is not generally applicable in industrial contexts. Instead, edge detection methods are preferred.

The contrast is easy to see-a 100 x 100 pixel square has 10000 interior pixels, but only 400 on the boundary! What a saving! However, against this we may note that region growing is easily amenable to parallel processing and so the balance between different techniques may change.

5. Reconstructing Objects:

Inferring Three-Dimensional Features:

Edge and region detection identify parts of an image. We need to establish the objects which the parts depict. We can use constraint satisfaction algorithms to determine what possible objects can be constructed from the lines given. First, we need to label the lines in the image to distinguish between concave edges, convex edges and obscuring edges. An obscuring edge occurs where a part of one object lies in front of another object or in front of a different part of the same object.

The convention is to use a ‘+’ to label a convex edge, a ‘-‘ for a concave edge and an arrow for an obscuring edge. The arrow has the object the edge is ‘attached’ to on its right and the obscured object on its left. Fig. 14.15., shows an object with the lines in the image suitably labelled.

How do we decide which labels to use for each line? Lines meet each other at vertices. If we assume that certain degenerate cases do not occur, then we need only worry about trihedral vertices (in which exactly three lines meet at a vertex). There are four types of such vertices, called L, T, fork (or Y) and arrow (or W).

There are 208 possible labellings using the four labels available, but happily only 18 of these are physically possible (see Figs. 14.16 -14.19.). We can therefore use these to constrain our line labelling. Waltz proposed a method for line labelling using these constraints.

Waltz’s Algorithm:

Waltz’s algorithm basically starts at the outside edges of the objects and works inward using the constraints. The outside edges must always be obscuring edges (where it is the background that is obscured).

Therefore, these can always be labelled with clockwise arrows.

The algorithm has the following stages:

1. Label the lines at the boundary of the scene.

2. Find vertices where the currently labelled lines are sufficient to determine the type of the vertex.

3. Label the rest of the lines from those vertices accordingly.

Steps 2 and 3 are repeated either until there are no unlabelled lines (success), or until there are no remaining vertices which are completely determined (failure).

We will follow through the steps of this algorithm attempting to label the object in Fig. 14.15. We start by naming the vertices and labelling the boundary lines. This gives the labelling in Fig. 14.20(i).

We now perform the first pass of steps 2 and 3, a, c, f and h are arrow vertices with the two side arms labelled as boundaries (‘>’). Only type A6 matches this, so the remaining line attached to each of these vertices must be convex (‘+’). Similarly, the T vertex d must be of type T4, hence the line d-k is a boundary. Vertices e and i are already fully labelled, so add less information. The results of this pass are shown in (ii).

On the second pass of steps 2 and 3 we concentrate on vertices j, k and t. Unfortunately, vertex k is not determined yet; it might be of type L1 or L5 and we have to wait until we have more information. However, vertices and t are more helpful: they are forks with one concave line. We see that if one line to a fork is concave it must be of type F1 and so all the lines from it are concave. These are marked in (iii).

As we start the third pass, we see that k is still not determined, but m is an arrow with two concave arms. It is therefore of type A3 and the remaining edge is concave. This also finally determines that k is of type L5. The fully labelled object (iv) now agrees with the original labelling in Fig. 14.15.

Problems with Labelling:

Waltz’s algorithm will always find the unique correct labelling if one exists. However, there are scenes for which there are multiple labelling, or for which no labelling can be found. Fig. 14.21., shows a scene with an ambiguous labelling. The first labelling corresponds to the upper block being attached to the lower one. In the second labelling the upper block is ‘floating’ above the lower one.

If there were a third block between the other two we would be able to distinguish the two, but with no further information we cannot do so. With this scene, Waltz’s algorithm would come to an impasse at stage 2, when it would have unlabelled vertices remaining, but none which are determined from the labelled edges. At this stage, we could make a guess about edge labelling, but whereas the straightforward algorithm never needs to backtrack, we might need to change our guesses as we search for a consistent labelling.

Fig. 14.21., shows the other problem, a scene which cannot be labelled consistently. In this case Waltz’s algorithm would get stuck at step 3. Two different vertices would each try to label the same edge differently. The problem edge is the central diagonal. Reasoning from the lower arm, the algorithm thinks it is convex, but reasoning from the other two arms it thinks it is concave. To be fair, the algorithm is having exactly the same problem as we have with this image. It is locally sensible, but there is no reasonable interpretation of the whole scene.

Given only the set of vertex labelling from Figs. 14.16 – 14.19., there are also sensible scenes which cannot be labelled. A pyramid which has faces meeting at the top cannot be labelled using trihedral vertices. Even worse, a piece of folded cloth may have a cusp, where a fold line disappears completely. These problems can be solved by extending the set of vertex types, but as we take into account more complex vertices and edges, the number of cases does increase dramatically.

We may also note that the algorithm starts with the premise that lines and vertices have been identified correctly, which is not necessarily a very robust assumption. If the edge detection is not perfect, then we might need to use uncertain reasoning while building up objects. Consider Fig. 14.21 – a valid scene that can be labelled consistently.

However, if the image is slightly noisy at the top right vertex it might be uncertain whether it is a T, an arrow or a Y vertex. If we choose the last of these, it would have the same problems as with the first, inconsistent figure. If the edge detection algorithm instead gave probabilities, we could use these with Bayesian reasoning to get the most likely labelling.

However, the search process would be somewhat more complicated than Waltz’s algorithm.

Using Properties of Regions:

Edge detection simply uses lines of rapid change, but discards the properties of the regions between the lines. However, there is a lot of information in these regions which can be used to understand the image or to identify objects in the image. For example, in Fig. 14.22., it is likely that the regions labelled and B are part of the same object partly obscured by the darker object. We might have guessed this from the alignment of the two regions, but if they are of the same colour this reinforces this conclusion.

Also, the position and nature of highlights and shadows can help to determine the position and orientation of objects. If we have concluded that an edge joins two parts of the same object, then we can use the relative brightness of the two faces to determine which is facing the right. Of course, this depends on the assumption that the faces are all of similar colour and shade. Such heuristics are often right, but can sometimes lead us to misinterpret art image—which is precisely why we can see a two-dimensional picture as if it had depth.

Once we know the position of the light source (or sources), we can work out which regions represent possible shadows of other objects and hence connect them to the face to which they belong. For example, in Fig. 14.24., we can see from the different shadings that the light is coming from above, behind and slightly to the left. It is then obvious that the black region is the shadow of the upper box and so is part of the top face of the lower box.

Shadows and lighting can also help us to disambiguate images. If one object casts a shadow on another then it must lie between that object and the light. Also, the shape of a shadow may be able to tell us about the distance of objects from one another and whether they are touching.

6. Identifying Objects:

Finally, having extracted various features from an image, we need to establish what the various objects are. The output of this will be some sort of symbolic representation at the semantic level. We shall discuss three ways of doing this which operate on different sorts of lower-level representation.

Using Bitmaps:

The simplest form of object identification is just to take the bitmap, suitably thresholded, and match it against various templates of known objects. We can then simply count the number of pixels which disagree and use this as a measure of fit. The best match is chosen, and so long as its match exceeds a certain threshold it is accepted.

This form of matching can work well where we can be sure that shapes are not occluded and where lighting levels can be chosen to ensure clean thresholded images. However, in many situations the match will be partial, either because of noise, or because the object is partly obscured by another object. Simply reducing the threshold for acceptability will not work. Consider the two images, in Fig. 14.25. They have a similar amount of pixels in common, but the first is clearly a triangle like the template whereas the latter is not.

Neural network techniques can be used to deal with noisy pattern matching. Several different types could be used, but most work by taking a series of examples of images of the different objects and ‘learning’ how to recognise them. Depending on the particular technique, the examples may be of perfect or of noisy images.

After training, when the network is presented with an image it identifies the object it thinks it matches, sometimes with an indication of certainty. Neural network can often give accurate results even when there is a large amount of noise, but without some of the unacceptable spurious matches from crude template matching. One reason for this is that many nets effectively match significant features, such as the corners and edges of the triangle. This is not because they have any particular knowledge built in, but simply because of the low-level way which they learn.

One problem with both template matching and neural networks is that they are looking for the object at a particular place in the image. They have problems when the object is at a different location or orientation than the examples with which they are taught. One solution is to use lots of examples at different orientations.

For template matching this increases the cost dramatically (one test for each orientation). For neural nets, the way in which the patterns are stored reduces this cost to some extent, but if too many patterns are taught without increasing the size of the network, the accuracy will eventually decay.

An alternative approach is to move the objects so that it is in the expected location. In an industrial situation, this can often be achieved by using arrangements of chutes falling slope and barriers which force the object into a particular position and orientation. Where this is not possible, an equivalent process can be carried out on the image.

If we are able to identify which region of the image represents an object, then this can be moved so that it lies at the bottom left-hand corner of the image, and then : matched in this standard position. This process is called normalisation. A few stray pixels at the bottom or left of the object can upset this process, but alternative normalisation methods are less susceptible to noise, for example moving the centre of gravity of the object to the centre of the image.

Similar methods can be used to standardise the orientation and size of the object. The general idea is to find a co-ordinate system relative to the object and then use this to transform the object into the standard co-ordinate system used for the matching.

A typical algorithm works like this:

i. Select a standard point on the object; say its centre of gravity.

ii. Choose the direction in which the object is ‘widest’; make this the x-axis.

iii. Take the axis orthogonal to the x-axis as the y-axis.

iv. Scale the two axes so that the object ‘fits’ within the unit square.

The definitions of ‘widest’ and fit’ from steps 2 and 4 can use the simple extent of the object, but are more often based on measures which are less noise sensitive. The process is illustrated in Fig. 14.25. The resulting x and y-axes are called an object-centred coordinate system. Obviously all the example images must be transformed in a similar fashion so that they match!

Using Summary Statistics:

An even simpler approach than template matching is to use simple statistics about the objects in the image, such as the length and width of the object (possibly in the object-centred system), the number of pixels with various values, and so on.

For example, if we were trying to separate nuts and bolts on a production line, then those objects with an aspect ratio (ratio of length to width) greater than some critical value would be classified as nuts. Another example would be a line producing washers where we are trying to reject those which have not had their centres properly removed. Those objects with too many pixels would be rejected as defective.

Using Outlines:

While discussing template matching it is observed that issues of location, orientation and size independence cause some problems. These get far worse when we have to consider three-dimensional rotations. At this point techniques using higher-level features, such as those generated by Waltz’s algorithm, become very attractive.

In essence one is still template matching, but now the templates are descriptions of the connectivity of various edges. Of course, the same object will have different edges visible depending on its orientation. However, we can generate a small set of representative orientations whereby any object matches one or other after a certain amount of deformation.

Figure 14.25., shows some of the representative orientations of a simple geometric object. The example set of all possible orientations can be generated by hand, or (ideally) automatically using a three-dimensional geometric model of the object. The number of orientations can be reduced dramatically if we can make any assumptions, say that the object’s base stays on the ground, or that the camera position is within certain bounds.

When an object is to be recognised it is matched against the representatives of all known objects. Each vertex and edge in the image object is matched with a corresponding one in the example. If such a correspondence can be found then the match succeeds.

The exact positions of the vertices and edges do not matter, but the relative geometric constraints must match. For example, in Fig. 14.27., image (ii) matches the template (i). However, (iii) does not because vertex d is a fork-type junction rather than an arrow.

The matching process can be more or less precise. In addition to the types of junctions, it may use information such as whether certain lines are parallel or vertical. However, adding constraints tends to mean that there are more cases to consider when producing the set of all representative orientations

This type of method can be used to match more complicated objects. If an object consists of various pieces then the pieces can be individually identified by the above method and then a description of the connectivity of the pieces within the object matched against the known objects. This allows us to detect objects which change their shape, such as people.

Hand Written Recognition:

Finally, we look at the special case of handwriting and gesture recognition. Reading human handwriting has long been an aim of AI. This has recently come to fruition, and pen-based computing is now affordable and rapidly becoming ubiquitous.

These systems recognise both characters (to enter data) and gestures (such as scribble to mean ‘delete’). These systems either demand that the writer uses very stylised letters, or that new writers spend some time training the system. Even when the system is trained the writer must write each character individually.

One way to approach handwritten text is to take the bitmap generated by the path of the pen and then process it. Some applications demand this approach, for example if we want to interpret proof corrections written onto paper copies and then scanned in. However, for an interactive system this throws away too much useful information. If we trace the path of the pen, we not only have the lines already separated from the background, but we also know the direction of the strokes and the order in which they were written.

These path data differ from the grey-scale or bitmap images we have considered so far. Instead of a set of intensities at positions, we have a set of positions of the pen at various times. We can match the strokes in the image against those learnt for the particular writer. However, handwritten letters and gestures are never exactly the same and so we must accept some variation. There are various approaches to this.

One way is to look for characteristic shapes of strokes: lines, curves, circles, and so on. Characters and gestures are then described using this vocabulary.

A letter ‘a’ might be described as either a circle with a line connected to the right or a semi-closed curve with a line closing it to the right.

An alternative is to try and match the strokes against examples stored during training. However, not only may the written characters vary, but also the points at which the pen is sampled may differ between the training example and the one to be recognised. We therefore has to warp the points on the path and find intermediate points which match most closely the example. The idea is to choose points which were not in the sample, but might have been!

The process is illustrated in Fig. 14.28. The sample points on the original template and the character which needs to be matched are shown in (i) and (ii). The template sample points are overlaid as closely as possible (iii), and then intermediate points (the warp points) are chosen on the lines connecting the sample points.

These are chosen so as to be as close to the template points as possible. At the ends of the stroke the warp points must be chosen on the extrapolation of the last lines. Now the warped points are used rather than the originals in deciding whether or not the character really matches the template.

7. Multiple Images:

In this may be all we have to work on, for example a single photograph of a scene. However, in some circumstances we have several images, which together can be used to interpret a scene. On the one hand, this can make life more difficult (lots of images to process!). On the other hand, we may be able to extract information from the combined images which is not in any single image alone.

These multiple images may arise from various sources:

1. Different sensors may be viewing the same scene.

2. Two cameras may be used simultaneously to give stereo vision.

3. We may have continuous video of a changing scene.

4. A fixed camera may be panning (and possibly zooming) over a scene.

5. The camera may be on a moving vehicle or mounting.

The first of these, the combination of different sorts of data (for example, infrared and normal cameras), is called data fusion. It is especially important for remote sensing applications, such as reconstructing images from satellite data. Different sensors may show up different features; hence edges and regions in the two images may not correspond in a one-to-one fashion.

If the registration between the sensors is known (that is, we may knows how they overlap), then the images can simply be overlaid and the information from each combined. Often this registration process is the most difficult part and the high-level data may be used to aid this process. For example, terrain-following cruise missiles rely on the matching of ground features with digital maps to calculate their course and position.

The last three sources of multiple images have somewhat different characteristics, but are similar enough to discuss together. We will therefore look at stereo vision and moving images in more detail.

Stereo Vision:

Let us try a common trival experiment. Look out into the room and hold a finger in front of your face. Now close your each eye in turn. Your finger appears to move back and forth across the room. Because your eyes are at different positions they see slightly different views of the world.

This is especially important in determining depth. This can be visualised by the help of a simple experiment. Hold a pencil in one hand and try to touch the tip of the finger of your other hand with the point of the pencil. No problem? Try it with one eye closed. The properties of stereo vision are one of the clues our eyes use to determine how far away things are.

One way to determine depth is to use triangulation in a similar way to a surveyor. Assuming we have been able to identify the same feature in both images, we can work out the angle between the two and hence the distance (see Fig. 14.27.). To use this method to give exact distances, we need very accurate calibration of the cameras. However, even without such accuracy we can use this method to obtain relative distances.

So far we have assumed that we know which objects are the same in each image. However, this matching of objects between images is a difficult problem in itself. We can attack it at various levels. On the one hand, we can simply look for patterns of pixels which match one another in the grey-scale image. To do this, we work out the correlation between groups of pixels in the two images at small offsets from one another. Where the correlation is large, we assume that there is some feature in common. The size of the offset then tells us the disparity in angle between the two images.

This disparity will usually highlight the boundaries of objects, as the faces often have nearly constant intensity. Alternatively, we can wait until objects have been identified in each image and then match the objects. The low-level approach has the advantage that the information from both images can be used for subsequent analysis.

Moving Pictures:

There can be three types of movement:

1. Objects may move in the scene,

2. The camera may pan or zoom, and

3. The camera may be mounted on a moving vehicle.

These all lead to similar but slightly different effects.

For example, an object moving towards the camera will have a similar effect to zooming the camera, or moving the camera closer to the object. Of course, several or all of the above effects may occur and we may even have stereo cameras and multiple sensors! To simplify the discussion, we will consider principally the case of a single stationary camera.

One special advantage of a stationary camera is that it may be possible to calibrate the camera when the scene is ’empty’. For example, if the camera is used for surveillance in an airport departure lounge, we can take an image when the lounge is empty. This will contain the fixed furniture, pillars, and so on.

Then, when we look at an image of the lounge in use, we will be able to match it with the fixed image, and so identify the additional objects. In fact, it is not quite so easy! Changes in lighting levels, or indeed automatic level control in the camera, mean that we have to perform some adjustment to remove the fixed background.

Whether or not we have removed part of the image, some parts, of the image change more rapidly than others. It is these regions of change which correspond to the moving objects. As with stereo vision, we can use local correlation to determine where groups of pixels in the image correspond to the same feature.

However, with stereo vision we need only look for change in one direction, parallel to the separation of the ‘eyes’. In contrast, the objects may move in any direction. Furthermore, when an object gets closer or far than away, the edges of the object move in different directions as the image of the object expands or contracts. We can usually only calculate the direction of movement orthogonal to the edge. Any movement parallel to the edge is invisible. Again, in the stereo case, this is only a problem where a long flat object is being viewed.

This all sounds quite complicated. Happily some things are easier! Because we have many images in sequence we can trace known objects. That is, once we have identified an object moving in a particular direction, we have a pretty good idea where to find it in the next image.

It is worth noting for both moving images and stereo vision the magnitude of change which is likely between images. Imagine we are tracking someone walking across the airport lounge. Assume that the person is 10 meters from the camera and walking at a brisk 1.5 m/s. At 15 frames per second the person will move through an angle of 0.01 of a radian (about half a degree)—between frames.

If the camera has a 60° viewing angle and we are capturing it at a resolution of 512 x 512 pixels, the person will move five pixels between frames. So, we have to do comparisons at one, two, three, four and five pixel offsets to be able to detect such movements – calculated all over the image 15 times per second! Even then, what about someone moving closer to the camera? Clearly, we have to design the algorithms carefully in order to save some of this work.